Creating a blended/ average face based on multiple faces

- Jul 8, 2020

- 2 min read

Updated: Jan 22, 2021

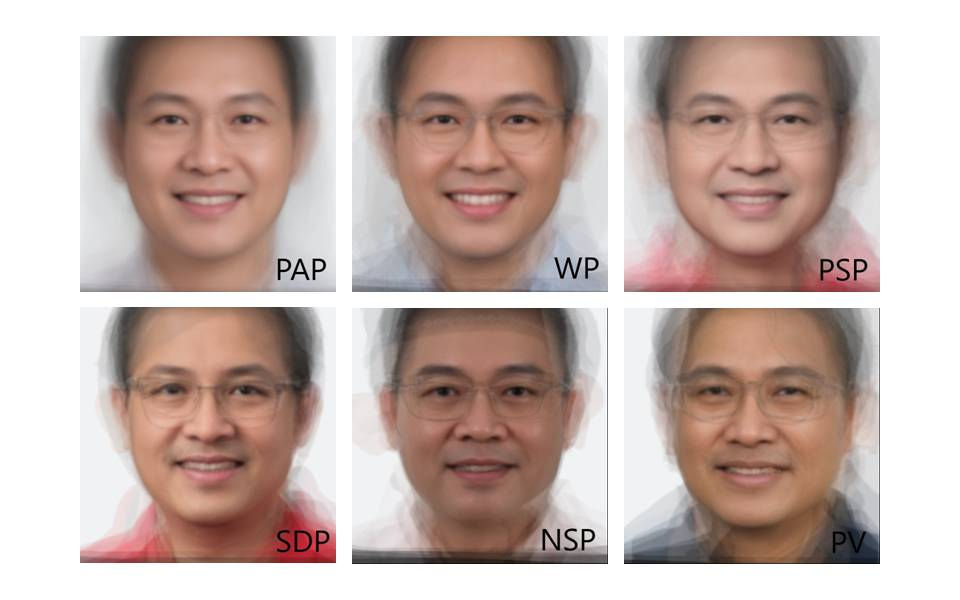

With current deep learning algorithms, we can create a new (averaged) face based on photos of multiple faces and this can be done easily. I decided to try this out on the candidates of the various parties running for the General Election in Singapore.

Data is scraped off: https://www.straitstimes.com/multimedia/graphics/2020/06/singapore-general-election-ge2020-candidates/index.html.

Here's the Average Face of the various parties. Order of photos in no particular order. And this is done only for parties with at least 10 candidates.

The code for scraping the profile pictures of candidates is as follows. The photos will be automatically downloaded into your local folder as you run the script.

from urllib.request import Request, urlopen

import requests

from bs4 import BeautifulSoup

import csv

import shutil

import re

import time

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

url = "https://www.straitstimes.com/multimedia/graphics/2020/06/singapore-general-election-ge2020-candidates/index.html"

options = Options()

options.add_argument('--headless')

options.add_argument('--disable-gpu')

driver = webdriver.Chrome(options=options)

driver.get(url)

time.sleep(3)

page = driver.page_source

driver.quit()

soup = BeautifulSoup(page, 'html.parser')

profile_pics = soup.find_all('img')

for i in range(31,369):

if(profile_pics[i]['alt']!=''):

pic_url = profile_pics[i]['src']

pic_name = profile_pics[i]['alt'].replace(' ','_')

pic_name = pic_name.replace('"','_')

r = requests.get(pic_url, stream=True, headers={'User-agent': 'Mozilla/5.0'})

if r.status_code == 200:

with open(pic_name+pic_url[-4:], 'wb') as f:

r.raw.decode_content = True

shutil.copyfileobj(r.raw, f)

print(i)In this case, before using BeautifulSoup to do the webscraping, I have to make use of selenium to load the page entirely first. Without it, I can't see the html of the entire page and am unable to detect/ scrape the html tags of the profile pictures. This has got to do with the way the webpage was designed where the page loads dynamically. If you need help with installing selenium, check out this post. After you have installed selenium and downloaded the appropriate chromedriver and placed it in the correct directory, you should have no problem running the code.

Also, if you face an error message like this (like me), SessionNotCreatedException: Message: session not created: This version of ChromeDriver only supports Chrome version 81, check that you have a chromedriver that is compatible with your Chrome version (chrome://version/).

For the face mixture/ blending/ averaging (whatever you called it), I tried to make use of this open-source library called facemorpher but it didn't work out for me (the average background didn't work). So I tried another open-source library called facer and it worked for me. We will need these libraries, OpenCV and dlib. I'm using Windows, so to install OpenCV, run pip install opencv-python. And if you run into issues installing dlib, check this out. As mentioned by the creator of Facer, really the tough part is to get the necessary modules/ libraries installed, after which things are easy. Depending on where you have saved the profile pictures, just remember that you have to update the folder path in the code example provided on the github page of Facer.

References:

https://stackoverflow.com/questions/52687372/beautifulsoup-not-returning-complete-html-of-the-page

https://stackoverflow.com/questions/60296873/sessionnotcreatedexception-message-session-not-created-this-version-of-chrome

Comments