Web scraping using selenium - Process - Python

- Oct 9, 2018

Updated: Feb 7, 2021

In addition to BeautifulSoup, selenium is a very useful package for web scraping when getting the desired output requires repeated user interaction with the website (e.g. clicking to select options from a dropdown list and then submitting). Selenium automates these user actions. Specifically, in this exercise we will create a script that (i) selects the Boarding Station and Alighting Station, (ii) submits the form, and (iii) scrapes the resulting fares and estimated travel times into a csv file. Python 2.7 is used.
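Step (iii) ends with the results in a csv file. Below is a minimal sketch of that last step (Python 3, standard library only), assuming each scraped result has already been collected as a dict. The column names are hypothetical, since the post does not show its csv layout.

```python
import csv
import io

# Hypothetical column names: the post does not list the csv headers it used.
FIELDS = ["boarding", "alighting", "fare", "travel_time"]


def write_results_csv(rows, fileobj):
    """Write a header plus one csv row per result dict to an open file object."""
    writer = csv.DictWriter(fileobj, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def results_to_csv_text(rows):
    """Same as write_results_csv, but return the csv text (handy for a quick check)."""
    buf = io.StringIO()
    write_results_csv(rows, buf)
    return buf.getvalue()


# To write a real file, open it with newline="" so the csv module controls line endings:
# with open("fares.csv", "w", newline="") as f:
#     write_results_csv(rows, f)
```

DictWriter fills any missing field with an empty string, so a row where, say, the fare could not be scraped still lines up with the header.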

This exercise will build on the previous one involving BeautifulSoup. If you're not familiar with BeautifulSoup, do read that post first, as we will also be using BeautifulSoup here.

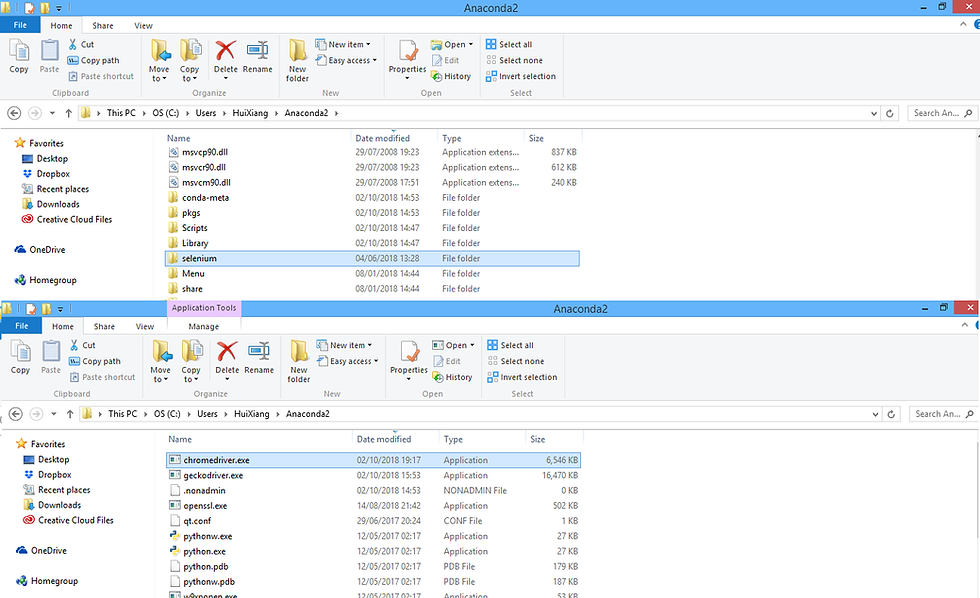

Instructions on how to install selenium can be found here. Other than selenium, you also need to download a driver for the browser you want to automate: chromedriver for chrome or geckodriver for firefox. I ran into issues using firefox as the webdriver (probably because I didn't have firefox installed on my laptop), so in this exercise I used chrome as the webdriver. The various drivers can be downloaded from this link.

Make sure that selenium and the driver are installed where your code can find them: selenium in the Python environment that runs your script, and the driver executable in a folder on your PATH. If you realise that pip install did not put selenium into the path your code is using, search for the selenium folder (as you would for any file or folder), copy it and paste it into that path. Otherwise, you will run into error messages such as ModuleNotFoundError: No module named 'selenium', 'geckodriver' executable needs to be in PATH, or Message: Unable to find a matching set of capabilities (all of which I ran into!).
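The first two errors come from two different lookups: ModuleNotFoundError means the Python interpreter running the script cannot find the selenium package, while the "needs to be in PATH" message means the driver executable is not on the operating system PATH. A small diagnostic sketch (Python 3, standard library only; check_setup is a hypothetical helper) that checks both before you run the scraper:

```python
import importlib.util
import shutil


def check_setup(drivers=("chromedriver", "geckodriver")):
    """Report whether selenium is importable and where each driver executable is found."""
    # find_spec searches this interpreter's module search path (sys.path).
    report = {"selenium": importlib.util.find_spec("selenium") is not None}
    for name in drivers:
        # shutil.which searches the operating system PATH; None means not found.
        report[name] = shutil.which(name)
    return report


if __name__ == "__main__":
    for name, status in check_setup().items():
        print("%s: %s" % (name, status))
```

Running it with the same interpreter you use for the scraper shows which of the two lookups is failing.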

Running the script opens a browser and closes it after successfully scraping the results from the page.
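It is worth guaranteeing that the browser closes even when scraping fails part-way, otherwise each failed run leaves a browser and driver process open. A minimal sketch of that pattern; run_scrape and its arguments are hypothetical names, not part of the original script:

```python
def run_scrape(driver_factory, scrape):
    """Open a browser with driver_factory(), run scrape(driver), and always close it."""
    driver = driver_factory()
    try:
        return scrape(driver)
    finally:
        # quit() closes every browser window and ends the WebDriver session,
        # even if scrape() raised an exception part-way through.
        driver.quit()
```

With selenium installed, a call might look like run_scrape(webdriver.Chrome, scrape_all_fares), where scrape_all_fares is your own (hypothetical) function that takes the driver and returns the results.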

If you're using firefox, just change webdriver.Chrome() to webdriver.Firefox(). The code can also be found here.
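One way to make that switch a single argument instead of a code edit is a small factory. This is a sketch assuming Python 3 and Selenium 4; make_driver is a hypothetical name. Recent Selenium 4 releases can also locate a matching driver on their own (Selenium Manager), so the PATH setup above matters most on older versions.

```python
def make_driver(browser="chrome"):
    """Return a new Selenium WebDriver for "chrome" or "firefox"."""
    name = browser.lower()
    if name not in ("chrome", "firefox"):
        raise ValueError("unsupported browser: %r" % (browser,))
    # Imported here so an invalid browser name fails fast, even without selenium installed.
    from selenium import webdriver

    if name == "chrome":
        return webdriver.Chrome()  # uses chromedriver
    return webdriver.Firefox()  # uses geckodriver
```

For example, driver = make_driver("firefox") replaces webdriver.Chrome() in the script.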

To find the names of the dropdown and submit button elements, right-click each of them on the page and select Inspect.

We can see that the name of the < Select Boarding Station > dropdown is mrtcode_start and that of the "Submit" button is submit.
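With those names, steps (i) and (ii) can be sketched as below (Python 3, Selenium 4 API). This is a minimal sketch rather than the original script: select_and_submit is a hypothetical helper, the Alighting Station dropdown name mrtcode_end is a guess that must be checked with Inspect, and it assumes the option labels match the station names you pass in. If the results are filled in by JavaScript rather than a full page load, you may also need a WebDriverWait before reading page_source.

```python
# Element names found with Inspect, as described above.
BOARDING_NAME = "mrtcode_start"
SUBMIT_NAME = "submit"
# Hypothetical: the post does not give the Alighting Station dropdown's name.
# Inspect it the same way and replace this value.
ALIGHTING_NAME = "mrtcode_end"


def select_and_submit(driver, boarding, alighting):
    """Pick both stations by their visible text, click Submit, return the page HTML."""
    # Imported here so this module loads even where selenium is not installed.
    # Selenium 4 locator style; older releases used driver.find_element_by_name(...).
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import Select

    Select(driver.find_element(By.NAME, BOARDING_NAME)).select_by_visible_text(boarding)
    Select(driver.find_element(By.NAME, ALIGHTING_NAME)).select_by_visible_text(alighting)
    driver.find_element(By.NAME, SUBMIT_NAME).click()
    # The returned HTML can be parsed with BeautifulSoup, as in the previous post.
    return driver.page_source
```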

[Edited on 13 Oct 2018] After some data exploration, we noted that the travel time from Station A to Station B is similar to that from Station B to Station A. Hence it is not necessary to loop through every ordered pair of stations, only half of them.
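Looping through only half the stations amounts to querying each unordered pair once: for n stations that is n(n-1)/2 queries instead of n(n-1) ordered pairs. A sketch using itertools (Python 3; station_pairs and mirror are hypothetical helpers, and any station names are placeholders). Note that mirror copies whatever value it is given, so only pass it fields you have checked are symmetric; the post confirms this for travel time.

```python
from itertools import combinations, permutations


def station_pairs(stations, symmetric=True):
    """List the (boarding, alighting) pairs to query.

    With symmetric=True each unordered pair is queried once: n*(n-1)/2 pairs
    instead of the n*(n-1) ordered pairs.
    """
    if symmetric:
        return list(combinations(stations, 2))
    return list(permutations(stations, 2))


def mirror(results):
    """Fill in the reverse direction, copying each A->B result to B->A."""
    full = dict(results)
    for (a, b), value in results.items():
        # setdefault keeps any B->A value that was actually scraped.
        full.setdefault((b, a), value)
    return full
```

For four stations, station_pairs returns 6 pairs rather than 12, and mirror then restores the full 12-entry table for the symmetric fields.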

The cleaned complete dataset can be found here.

Resources:

https://selenium-python.readthedocs.io/

https://medium.freecodecamp.org/better-web-scraping-in-python-with-selenium-beautiful-soup-and-pandas-d6390592e251

https://stackoverflow.com/questions/40208051/selenium-using-python-geckodriver-executable-needs-to-be-in-path
